The world of virtual characters in games and animated films is on the brink of a major revolution. Thanks to groundbreaking research from NVIDIA, highly realistic animations can now be computed 100 times faster than with traditional methods!

So what is the secret? A novel approach that applies AI-powered super-resolution to physics simulations, making real-time applications feasible without compromising detail. Let's take a closer look at what is going on.

The Challenge: Realism at a High Cost.

For decades, animators and researchers have relied on physics-based simulations to achieve realistic movement. These simulations can go as deep as modeling the interactions between muscles and soft tissue. However, this level of detail comes at a massive computational cost: calculating millions of tiny interactions is so expensive that high-fidelity simulations have historically been impractical for real-time applications.

But what if there was a way to bypass this limitation? Enter AI-driven super-resolution.

AI-Powered Super-Resolution for Simulations.

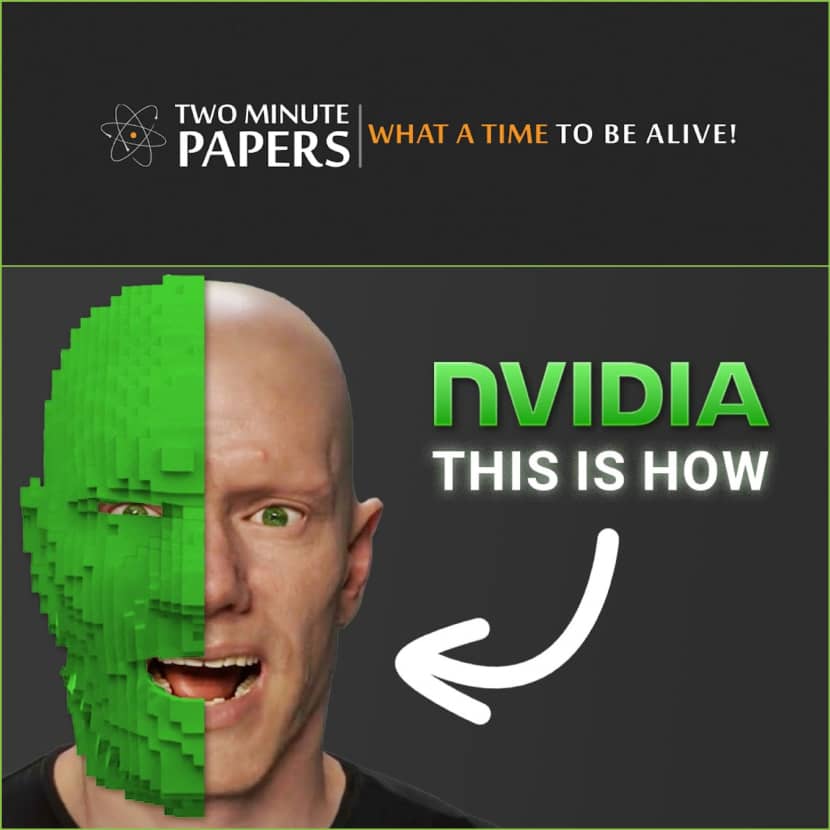

Super-resolution techniques have long been used to enhance images, taking low-resolution inputs and generating high-quality outputs. But NVIDIA’s researchers have taken this concept into the realm of 3D simulation. Their latest AI model can take a coarse, blocky animation and upscale it into an incredibly detailed motion sequence—without the need for hours of intensive computation.

How much faster? The numbers are staggering:

- A simulation that once took an entire night now runs in just five minutes.

- An animation that took one minute to compute is now processed in less than a second.

This dramatic speedup is a game-changer: it brings high-quality, physics-based animation within reach of real-time applications.

The Genius Behind the Breakthrough.

At first glance, simply upscaling a coarse simulation sounds like a straightforward AI application. However, naive upscaling would result in an entirely different animation, failing to preserve the realistic physics of the original high-resolution simulation.

The research team solved this by training the AI on pairs of low-resolution and high-resolution simulations. Learning from these paired examples lets the AI produce predictions that stay faithful to the physics captured by the full-resolution simulation.

The results? Astonishingly accurate animations that closely match full-resolution simulations—without the massive computational burden.
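To make the idea of learning from paired simulations a little more concrete, here is a toy sketch in Python. It is not NVIDIA's method: the actual research trains an AI model on full 3D character simulations, while this stand-in swaps in a simple least-squares linear map on a synthetic 1D "displacement field". The grid sizes, the smooth sine basis that plays the role of the "physics", and the block averaging used to produce each coarse frame are all hypothetical choices made purely for illustration.

```python
import numpy as np

FINE_N = 32    # hypothetical number of points in the detailed simulation
COARSE_N = 8   # hypothetical number of points in the coarse simulation
FACTOR = FINE_N // COARSE_N

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, FINE_N)
# Assumption: smooth sine modes stand in for the "physics" of the fine simulation.
basis = np.stack([np.sin(np.pi * k * x) for k in range(1, 5)], axis=1)  # (32, 4)


def make_pairs(num_samples):
    """Return (coarse, fine) pairs; each coarse frame is its fine frame averaged over blocks."""
    coeffs = rng.normal(size=(num_samples, basis.shape[1]))
    fine = coeffs @ basis.T                                            # (num_samples, 32)
    coarse = fine.reshape(num_samples, COARSE_N, FACTOR).mean(axis=2)  # (num_samples, 8)
    return coarse, fine


def fit_upsampler(coarse, fine):
    """Fit a linear map W so that fine is approximately coarse @ W (least squares)."""
    W, *_ = np.linalg.lstsq(coarse, fine, rcond=1e-10)
    return W  # shape (COARSE_N, FINE_N)


def naive_upsample(coarse):
    """Naive upscaling: repeat each coarse value FACTOR times, with no learning."""
    return np.repeat(coarse, FACTOR, axis=1)


# "Training": learn the coarse-to-fine mapping from paired examples.
train_coarse, train_fine = make_pairs(200)
W = fit_upsampler(train_coarse, train_fine)

# Evaluate on unseen pairs drawn from the same toy "physics".
test_coarse, test_fine = make_pairs(50)
learned_err = np.abs(test_coarse @ W - test_fine).max()
naive_err = np.abs(naive_upsample(test_coarse) - test_fine).max()
print(f"learned max error: {learned_err:.2e}")
print(f"naive max error:   {naive_err:.2e}")
```

The comparison at the end mirrors the point above: simply repeating coarse values (naive upscaling) misses the fine detail, while the map learned from coarse/fine pairs recovers it for this toy data. A real system faces far richer, nonlinear motion, which is why a learned AI model is used there instead of a single linear map.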

Can It Handle Unseen Movements?

One of the most impressive claims of this research is that it generalizes well to unseen expressions and movements. While some results may appear slightly wobbly, the AI has demonstrated an incredible ability to predict tiny muscle deformations that weren’t even present in the training data.

For example, when the model was tested on facial animations, it was able to synthesize realistic nose deformations based on mouth movements—a phenomenon that wasn't explicitly programmed into the system. This hints at the potential for even more sophisticated AI-driven simulations in the near future.

The Future of Virtual Characters.

The implications of this research extend far beyond individual character animations. Imagine fully simulated characters with realistic muscle structures and facial expressions, all running in real time.

This breakthrough lays the foundation for a future where digital characters move, interact, and express emotions as naturally as real humans. The technology could soon find applications in:

- Game development: Real-time character animations without pre-baked motion capture.

- Film and VFX: Faster production times without sacrificing realism.

- Virtual reality: Fully interactive, physics-accurate avatars in immersive worlds.

A New Era in Animation.

As Two Minute Papers' Dr. Károly Zsolnai-Fehér aptly puts it: "Research is a process. Do not look at where we are—look at where we will be two more papers down the line."

With NVIDIA’s AI paving the way for ultra-fast, high-fidelity virtual characters, the future of animation is closer than ever. The dream of real-time, physics-accurate digital humans is no longer a far-off fantasy; it seems to be happening right now.

For more information and details, watch the video below!

Two Minute Papers is a YouTube channel dedicated to making recent scientific research accessible and engaging to a broad audience. Hosted by Dr. Károly Zsolnai-Fehér, the channel offers concise and informative summaries of cutting-edge studies in fields such as artificial intelligence, computer graphics, and machine learning. Each episode distills complex topics into easily digestible content, aiming to ignite curiosity and appreciation for the latest advancements in science and technology.